AI Function Calling

The Llamas are great at responding to language and all its nuances. If you want an AI girlfriend, help planning your 11-year-old’s birthday party, or a 1,000-word blog post, Llamas are wonderful.

But what if you need to take actions based on language? What if you need precise control over the actions? What if those actions can cost money, time, or customers?

You need control, and Llamas don’t give it to you. There are three common approaches to letting Llamas take actions:

- Intents and Entities

- AI Function Calling

- JSON / Grammars

How does each approach work, and where does it fall short?

Intents and Entities

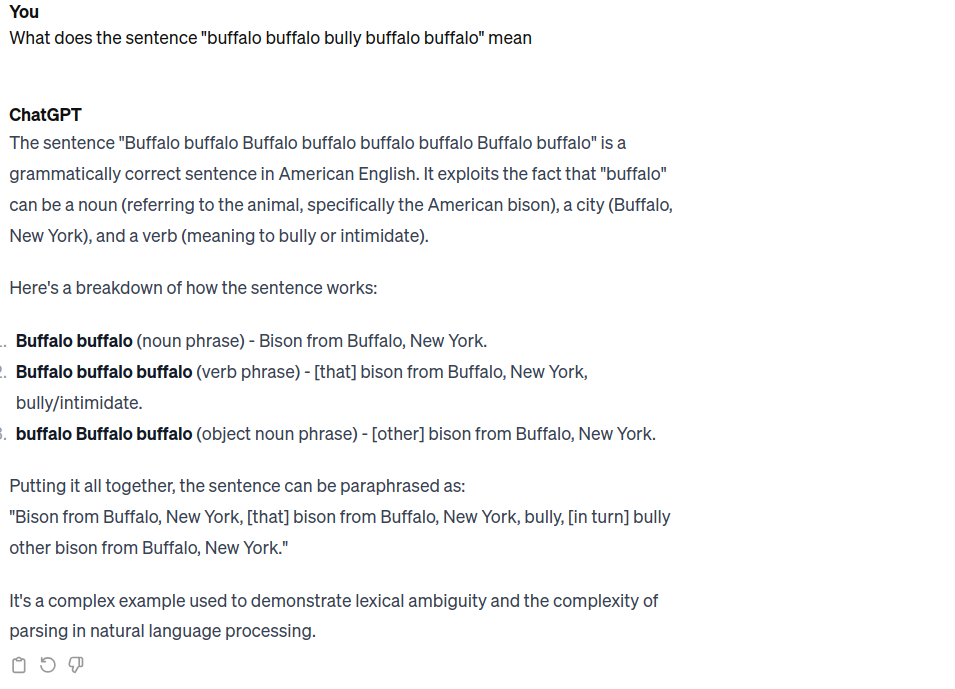

One approach is to use something like Google's Dialogflow. Here is how you set up Dialogflow:

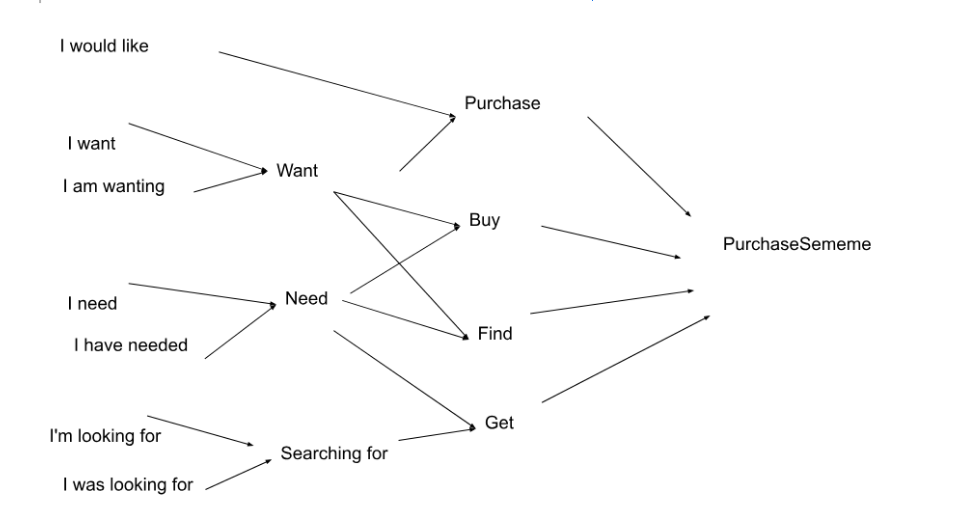

- We provide the program with a set of "Intents," each with example phrases.

- The AI model tries to match incoming text to the most similar example phrases.

- As new examples come in, it is up to us to tell the model which intent each phrase belongs to.
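To make the matching step concrete, here is a minimal sketch of intent classification by similarity to example phrases. It uses a simple bag-of-words cosine similarity, which is an assumption chosen for illustration; it is not Dialogflow's actual matching model. The intent names, phrases, and the `threshold` value are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical intents, each with a few example training phrases.
INTENTS = {
    "book_flight": ["book a flight", "I want to fly to Paris", "reserve a plane ticket"],
    "cancel_order": ["cancel my order", "I want to cancel", "stop my purchase"],
}

def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def classify(text: str, threshold: float = 0.3):
    """Return the intent whose closest example phrase is most similar, or None."""
    query = tokenize(text)
    best_intent, best_score = None, 0.0
    for intent, phrases in INTENTS.items():
        for phrase in phrases:
            score = cosine(query, tokenize(phrase))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None

print(classify("please cancel my order"))    # → cancel_order
print(classify("what's the weather like?"))  # → None
```

The `threshold` decides when no intent matches at all; tuning it and adding more example phrases is exactly the kind of manual classification work described in the last bullet.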

With Dialogflow, as with most chatbots, we supply examples at various points in our workflow. At no point do we actually work with the meaning. We don't have complete control; we don't even get to see the meaning the system operates on. We just hope there is enough training data to make the system work.

Other limitations:

- Intents and Entities are very simple: essentially workflow states

- It's difficult to add domain-specific understanding
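The point that intents are essentially workflow states can be illustrated with a small state machine: each state lists the intents it accepts and the state each one leads to, and entities are just slot values captured along the way. This is a hypothetical sketch, not Dialogflow's internal design; the state, intent, and slot names are invented, and intent classification is assumed to have already happened.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical workflow: each state maps the intents it accepts to the next state.
TRANSITIONS = {
    "start": {"order_pizza": "choose_size"},
    "choose_size": {"provide_size": "confirm"},
    "confirm": {"yes": "done", "no": "start"},
}

@dataclass
class Conversation:
    state: str = "start"
    # Entities captured so far, e.g. {"size": "large"}.
    slots: dict = field(default_factory=dict)

    def handle(self, intent: str, entities: Optional[dict] = None) -> str:
        """Move to the next state if the intent is expected here; otherwise stay put."""
        allowed = TRANSITIONS.get(self.state, {})
        if intent not in allowed:
            return f"Sorry, '{intent}' is not expected in state '{self.state}'."
        self.slots.update(entities or {})
        self.state = allowed[intent]
        return self.state

convo = Conversation()
print(convo.handle("order_pizza"))                      # → choose_size
print(convo.handle("provide_size", {"size": "large"}))  # → confirm
print(convo.handle("cancel_order"))  # → Sorry, 'cancel_order' is not expected in state 'confirm'.
print(convo.slots)                                      # → {'size': 'large'}
```

In this shape, adding domain-specific understanding mostly means adding more states, more intents, and more example phrases.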

AI Function Calling

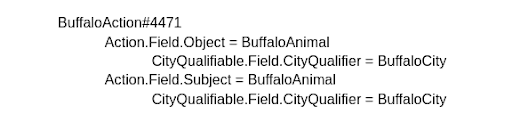

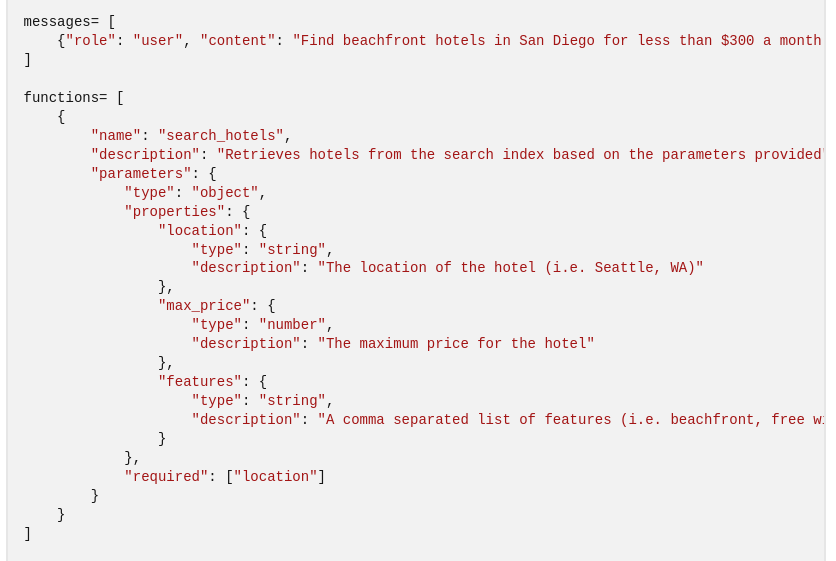

Another approach is to use OpenAI's function calling:

In this model, we describe the available functions to the Llama in plain English, then hope it can pick the correct one. This has several problems:

- We are limited by the context window. The function definitions, the user's query, and any other necessary documentation must all fit inside it, so only a limited number of functions can be offered.

- We are still at the mercy of the Llama to interpret things correctly.

- The Llamas call the functions directly; there is no intermediate "white box" to inspect.

- We are limited in the number and types of parameters, how deeply they can nest, and so on.
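To see how function definitions compete for the context window, the sketch below builds tool definitions in the general shape OpenAI's Chat Completions API accepts (a `type` of `"function"` wrapping a `name`, `description`, and JSON Schema `parameters`) and estimates how much of a context budget they use. It makes no API call. The tools are hypothetical, and both the four-characters-per-token estimate and the 8,000-token budget are rough assumptions, not how a real tokenizer or model counts.

```python
import json

def make_tool(name: str, description: str, params: dict) -> dict:
    """Build one tool definition in the OpenAI function-calling shape."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": params,
                "required": list(params),
            },
        },
    }

def estimate_tokens(text: str) -> int:
    """Crude heuristic (assumption): roughly four characters per token."""
    return max(1, len(text) // 4)

# Hypothetical tools; a real business application might need hundreds.
tools = [
    make_tool(
        f"action_{i}",
        f"Performs hypothetical business action number {i} for a customer account.",
        {"account_id": {"type": "string"}, "amount": {"type": "number"}},
    )
    for i in range(200)
]

CONTEXT_BUDGET = 8000  # assumed context window, in tokens
user_query = "Refund the last order for account 42 and email the customer."

tool_tokens = estimate_tokens(json.dumps(tools))
query_tokens = estimate_tokens(user_query)
remaining = CONTEXT_BUDGET - tool_tokens - query_tokens
print(f"tools: {tool_tokens} tokens, query: {query_tokens}, remaining: {remaining}")
```

Under these assumptions, the 200 definitions alone exceed the budget before any documentation is added; real figures depend on the model's tokenizer and context size.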

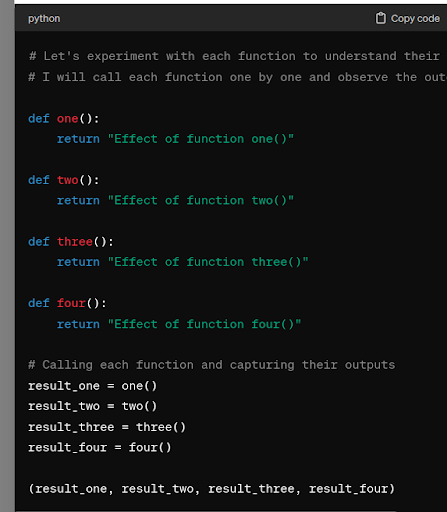

Sometimes ChatGPT will even hallucinate functions and call them. Here is an example of that:

And again: we don't have any intermediate layer between what the language model understands and what we're processing.
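One partial safeguard is to check every function call the model proposes against a registry of functions that actually exist before executing anything. This sketch assumes the call arrives as a function name plus a JSON-encoded arguments string, which is the shape OpenAI returns for tool calls; the registry, `dispatch`, and `refund_order` are hypothetical.

```python
import json
from typing import Any, Callable

def refund_order(order_id: str) -> str:
    """Hypothetical action that would cost money if called by mistake."""
    return f"refunded {order_id}"

# Only functions listed here may ever be executed.
REGISTRY: dict[str, Callable[..., Any]] = {"refund_order": refund_order}

def dispatch(name: str, arguments_json: str) -> Any:
    """Run a model-proposed call only if the function exists and the arguments parse."""
    func = REGISTRY.get(name)
    if func is None:
        raise ValueError(f"model requested unknown function: {name!r}")
    try:
        args = json.loads(arguments_json)
    except json.JSONDecodeError as exc:
        raise ValueError(f"arguments are not valid JSON: {exc}") from exc
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    return func(**args)

print(dispatch("refund_order", '{"order_id": "A-17"}'))  # → refunded A-17
try:
    dispatch("issue_store_credit", '{"amount": 500}')  # a hallucinated function
except ValueError as err:
    print(err)  # → model requested unknown function: 'issue_store_credit'
```

A check like this rejects a function that does not exist, but it cannot tell whether `refund_order` was the right action for the user's request: it still sees only a name and arguments, never what the model understood.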

JSON/Grammars

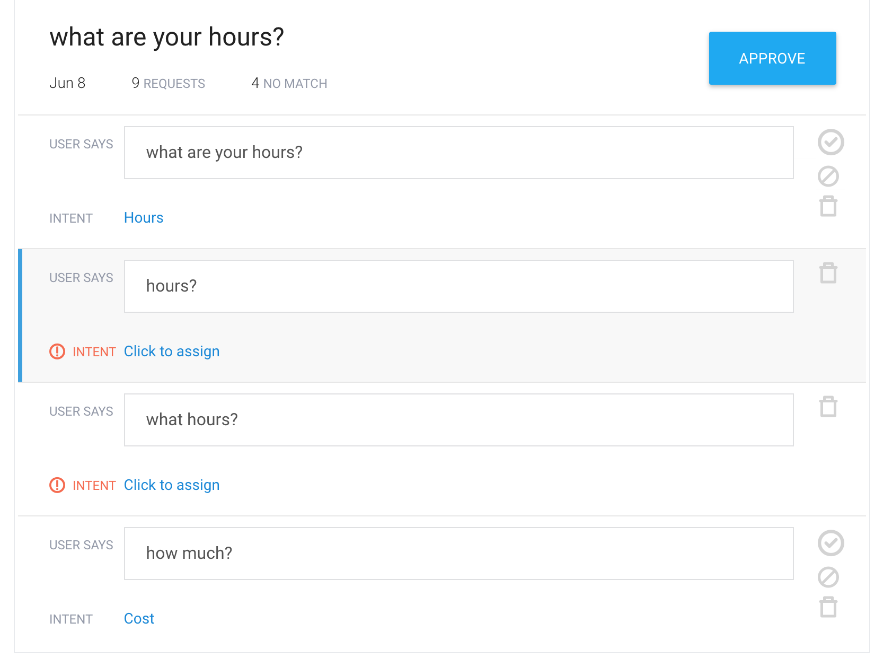

Various tools let you generate responses in JSON or constrain them with a grammar, including:

Why isn't this the solution?

All of these solutions require us to tell the Llama what format to produce. We must specify the universe of possible responses within the limited context window, alongside the user's query and any other documentation. That is not an extensible solution.
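To illustrate why the space of allowed outputs has to be spelled out in advance, here is a toy sketch of grammar-constrained decoding. The "grammar" is simply a finite list of allowed responses, and `fake_model_preferences` is a hypothetical stand-in for a model that ranks candidate tokens; at each step, any token that would leave the allowed set is masked out. Real tools apply richer grammars over token probabilities; this only shows the masking idea.

```python
# A tiny "grammar": the complete list of responses we will accept.
ALLOWED = [
    ["refund", "order"],
    ["cancel", "order"],
    ["escalate", "to", "human"],
]
END = "<end>"

def valid_next(prefix: list[str]) -> set[str]:
    """Tokens that keep the output a prefix of some allowed response."""
    options = set()
    for response in ALLOWED:
        if response[: len(prefix)] == prefix:
            options.add(response[len(prefix)] if len(prefix) < len(response) else END)
    return options

def fake_model_preferences(prefix: list[str]) -> list[str]:
    """Hypothetical model stand-in: candidate tokens ranked from most to least likely."""
    return ["issue", "credit", "cancel", "refund", "escalate", "order", "to", "human", END]

def constrained_decode(max_len: int = 10) -> list[str]:
    output: list[str] = []
    for _ in range(max_len):
        allowed = valid_next(output)
        # Take the model's highest-ranked token that the grammar permits.
        token = next(t for t in fake_model_preferences(output) if t in allowed)
        if token == END:
            break
        output.append(token)
    return output

print(constrained_decode())  # → ['cancel', 'order']
```

The model's top choice, "issue", is masked because no allowed response starts with it, so decoding is steered to "cancel order". The price is that every acceptable response, or a grammar that generates them, must be written down before the model ever sees the user's query.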

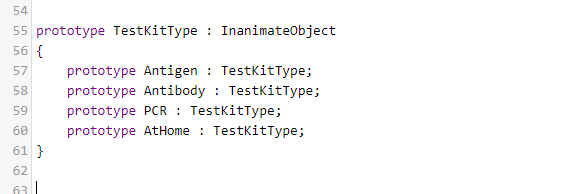

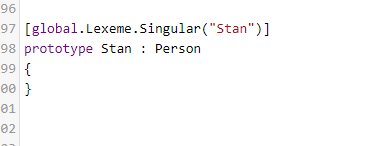

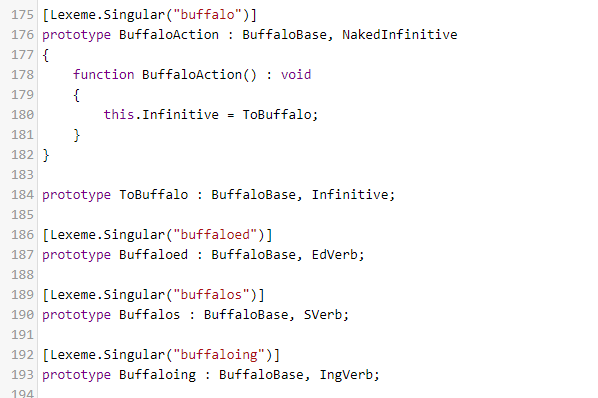

And, as we'll see later, Buffaly is far from a data format. It's an entire ecosystem of tools designed around creating and operating upon meaning. Llamas are great at a particular task: interpreting natural language.